Radical Empiricism and Machine Learning Research

A speaker at a lecture I attended recently summarized the philosophy of machine learning this way: “All knowledge comes from observed data, some from direct sensory experience and some from indirect experience, transmitted to us either culturally or genetically.”

The statement was taken as self-evident by the audience, and set the stage for a lecture on how the nature of “knowledge” can be analyzed by examining patterns of conditional probabilities in the data. Naturally, it invoked no notions such as “external world,” “theory,” “data generating process,” “cause and effect,” “agency,” or “mental constructs” because, ostensibly, these notions, too, should emerge from the data if needed. In other words, whatever concepts humans invoke in interpreting data, be their origin cultural, scientific or genetic, can be traced to, and re-derived from, the original sensory experience that has endowed those concepts with survival value.

Viewed from an artificial intelligence perspective, this data-centric philosophy offers an attractive, if not seductive, agenda for machine learning research: in order to develop human-level intelligence, we should merely trace the way our ancestors did it, and simulate both genetic and cultural evolution on a digital machine, taking as input all the data that we can possibly collect. Taken to extremes, such an agenda may inspire fairly futuristic and highly ambitious scenarios: start with a simple neural network, resembling a primitive organism (say an amoeba), let it interact with the environment, mutate and generate offspring; given enough time, it will eventually emerge with an Einstein-level intellect. Indeed, ruling out sacred scriptures and divine revelation, where else could Einstein have acquired his knowledge, talents and intellect if not from the stream of raw data that has impinged upon the human race since antiquity, including of course all the sensory inputs received by the more primitive organisms preceding humans?

Before asking how realistic this agenda is, let us preempt the discussion with two observations:

(1) Simulated evolution, in some form or another, is indeed the leading paradigm inspiring most machine learning researchers today, especially those engaged in connectionism, deep learning and neural network technologies, which deploy model-free, statistics-based learning strategies. The impressive success of these strategies in applications such as computer vision, voice recognition and self-driving cars has stirred up hopes for the sufficiency and unlimited potential of these strategies, eroding, at the same time, interest in model-based approaches.

(2) The intellectual roots of the data-centric agenda are deeply grounded in the empiricist branch of Western philosophy, according to which sense-experience is the ultimate source of all our concepts and knowledge, with little or no role given to “innate ideas” and “reason” as sources of knowledge (Markie, 2017). Empiricist ideas can be traced to the ancient writings of Aristotle, but were given prominence by the British empiricists Francis Bacon, John Locke, George Berkeley and David Hume and, more recently, by philosophers such as Charles Sanders Peirce and William James. Modern connectionism has in fact been viewed as a Triumph of Radical Empiricism over its rationalistic rivals (Buckner, 2018; Lipton, 2015). It can definitely be viewed as a testing ground in which philosophical theories about the balance between empiricism and innateness can be submitted to experimental evaluation on digital machines.

The merits of testing philosophical theories notwithstanding, I have three major reservations about the wisdom of pursuing a radical empiricist agenda for machine learning research. I will present three arguments for why empiricism should be balanced with the principles of model-based science (Pearl, 2019), in which learning is guided by two sources of information: (a) data and (b) man-made models of how data are generated.

I label the three arguments (1) Expediency, (2) Transparency and (3) Explainability, and will discuss them in turn below.

1. Expediency

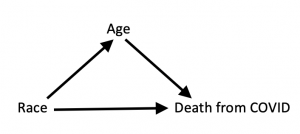

Evolution is too slow a process (Turing, 1950), since most mutations are useless if not harmful, and waiting for natural selection to distinguish and filter the useful from the useless is often unaffordable. The bulk of machine learning tasks requires speedy interpretation of, and quick reaction to, new and sparse data, too sparse to allow filtering by random mutations. The outbreak of the COVID-19 pandemic is a perfect example of a situation where sparse data, arriving from unreliable and heterogeneous sources, required quick interpretation and quick action, based primarily on prior models of epidemic transmission and data production (https://ucla.in/3iEDRVo). In general, machine learning technology is expected to harness the huge amount of scientific knowledge already available, combine it with whatever data can be gathered, and solve crucial societal problems in areas such as health, education, ecology and economics.

Even more importantly, scientific knowledge can speed up evolution by actively guiding the selection or filtering of data and data sources. Choosing what data to consider or what experiments to run requires hypothetical theories of what outcomes are expected from each option, and how likely they are to improve future performance. Such expectations are provided, for example, by causal models that predict both the outcomes of hypothetical manipulations and the consequences of counterfactual undoing of past events (Pearl, 2019).

2. Transparency

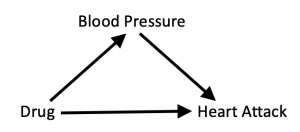

World knowledge, even if evolved spontaneously from raw data, must eventually be compiled and represented in some machine form to be of any use. The purpose of compiled knowledge is to amortize the discovery process over many inference tasks without repeating the former. The compiled representation should then facilitate an efficient production of answers to a select set of decision problems, including questions on ways of gathering additional data. Some representations allow for such inferences and others do not. For example, knowledge compiled as patterns of conditional probability estimates does not allow for predicting the effect of actions or policies (Pearl, 2019).
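
The last point can be made concrete with a small sketch. It is not taken from Pearl (2019); the two models, their variable names and every number below are hypothetical, chosen only for illustration. Model A has a hidden common cause Z of X and Y; model B has no such cause and lets X influence Y directly. Both models produce exactly the same conditional probabilities P(Y | X), yet they disagree on the effect of setting X by action.

```python
from itertools import product

# Hypothetical model A: a binary Z influences both X and Y.
# All probabilities here are illustrative assumptions.
P_Z1 = 0.5
P_X1_GIVEN_Z = {0: 0.2, 1: 0.8}


def p_y1_given_xz(x, z):
    """P(Y=1 | X=x, Z=z) in model A."""
    return 0.1 + 0.2 * x + 0.6 * z


def joint_a(x, y, z):
    """Joint probability P(X=x, Y=y, Z=z) in model A."""
    pz = P_Z1 if z == 1 else 1 - P_Z1
    px = P_X1_GIVEN_Z[z] if x == 1 else 1 - P_X1_GIVEN_Z[z]
    py1 = p_y1_given_xz(x, z)
    py = py1 if y == 1 else 1 - py1
    return pz * px * py


def observed_p_y1_given_x(x):
    """P(Y=1 | X=x): what a purely observational learner can estimate."""
    numerator = sum(joint_a(x, 1, z) for z in (0, 1))
    denominator = sum(joint_a(x, y, z) for y, z in product((0, 1), repeat=2))
    return numerator / denominator


def effect_of_action_model_a(x):
    """P(Y=1 | do(X=x)) in model A: Z keeps its own distribution."""
    return sum(
        (P_Z1 if z == 1 else 1 - P_Z1) * p_y1_given_xz(x, z) for z in (0, 1)
    )


def effect_of_action_model_b(x):
    """P(Y=1 | do(X=x)) in model B: no common cause, so acting on X
    gives the same answer as observing X."""
    return observed_p_y1_given_x(x)


if __name__ == "__main__":
    seen = observed_p_y1_given_x(1) - observed_p_y1_given_x(0)
    act_a = effect_of_action_model_a(1) - effect_of_action_model_a(0)
    act_b = effect_of_action_model_b(1) - effect_of_action_model_b(0)
    print(f"observed difference P(Y=1|X=1) - P(Y=1|X=0): {seen:.2f}")
    print(f"effect of action under model A:              {act_a:.2f}")
    print(f"effect of action under model B:              {act_b:.2f}")
```

Since the conditional probabilities are identical in the two models, no amount of refinement of those estimates can tell which effect of action is the right one; the answer depends on the model assumed to have generated the data.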

Knowledge compilation involves both abstraction and re-formatting. The former allows for information loss (as in the case of probability models) while the latter retains the information content and merely transforms some of the information from implicit to explicit representations.

These considerations demand that we study the mathematical properties of compiled representations, their inherent limitations, the kind of inferences they support, and how effective they are in producing the answers they are expected to produce. In more concrete terms, machine learning researchers should engage in what is currently called “causal modelling” and use the tools and principles of causal science to guide data exploration and data interpretation processes.

3. Explainability

Regardless of how causal knowledge is accumulated, discovered or stored, the inferences enabled by that knowledge are destined to be delivered to, and benefit, a human user. Today, these usages include policy evaluation, personal decisions, generating explanations, assigning credit and blame, or making general sense of the world around us. All inferences must therefore be cast in a language that matches the way people organize their world knowledge, namely, the language of cause and effect. It is imperative, therefore, that machine learning researchers, regardless of the methods they deploy for data fitting, be versed in this user-friendly language, its grammar, its universal laws and the way humans interpret or misinterpret the functions that machine learning algorithms discover.

Conclusions

It is a mistake to equate the content of human knowledge with its sense-data origin. The format in which knowledge is stored in the mind (or on a computer) and, in particular, the balance between its implicit vs. explicit components are as important for its characterization as its content or origin.

While radical empiricism may be a valid model of the evolutionary process, it is a bad strategy for machine learning research. It gives license to the data-centric thinking, currently dominating both statistics and machine learning cultures, according to which the secret to rational decisions lies in the data alone.

A hybrid strategy balancing “data-fitting” with “data-interpretation” better captures the stages of knowledge compilation that the evolutionary process entails.

References:

Buckner, C. (2018) “Deep learning: A philosophical introduction,” Philosophy Compass, https://doi.org/10.1111/phc3.12625.

Lipton, Z. (2015) “Deep Learning and the Triumph of Empiricism,” KDnuggets News, July. Retrieved from: https://www.kdnuggets.com/2015/07/deep-learning-triumph-empiricism-over-theoretical-mathematical-guarantees.html.

Markie, P. (2017) “Rationalism vs. Empiricism,” Stanford Encyclopedia of Philosophy, https://plato.stanford.edu/entries/rationalism-empiricism/.

Pearl, J. (2019) “The Seven Tools of Causal Inference with Reflections on Machine Learning,” Communications of the ACM, 62(3): 54-60, March, https://cacm.acm.org/magazines/2019/3/234929-the-seven-tools-of-causal-inference-with-reflections-on-machine-learning/fulltext.

Turing, A.M. (1950) “Computing Machinery and Intelligence,” Mind, LIX (236): 433-460, October, https://doi.org/10.1093/mind/LIX.236.433.

The following email exchange with Yoshua Bengio clarifies the claims and aims of the post above.

Yoshua Bengio commented Aug 3 2020 2:21 pm

Hi Judea,

Thanks for your blog post! I have a high-level comment. I will start from your statement that “learning is guided by two sources of information: (a) data and (b) man-made models of how data are generated.” This makes sense in the kind of setting you have often discussed in your writings, where a scientist has strong structural knowledge and wants to combine it with data in order to arrive at some structural (e.g. causal) conclusions. But there are other settings where this view leaves me wanting more. For example, think about a baby before about 3 years old, before she can gather much formal knowledge of the world (simply because her linguistic abilities are not yet developed or not enough developed, not to mention her ability to consciously reason). Or think about how a chimp develops an intuitive understanding of his environment which includes cause and effect. Or about an objective to build a robot which could learn about the world without relying on human-specified theories. Or about an AI which would have as a mission to discover new concepts and theories which go well beyond those which humans provide. In all of these cases we want to study how both statistical and causal knowledge can be (jointly) discovered. Presumably this may be from observations which include changes in distribution due to interventions (our learning agent’s or those of other agents). These observations are still data, just of a richer kind than what current purely statistical models (I mean those trying to capture only joint distributions or conditional distributions) are built on. Of course, we *also* need to build learning machines which can interact with humans, understand natural language, explain their decisions (and our decisions), and take advantage of what human culture has to offer.
Not taking advantage of knowledge when we have it may seem silly, but (a) our presumed knowledge is sometimes wrong or incomplete, (b) we still want to understand how pre-linguistic intelligence manages to make sense of the world (including of its causal structure), and (c) forcing us into this more difficult setting could also hasten the discovery of the learning principles required to achieve (a) and (b).

Cheers and thanks again for your participation in our recent CIFAR workshop on causality!

— Yoshua

Judea Pearl reply, August 4 5:53 am

Hi Yoshua,

The situation you are describing, “where a scientist has strong structural knowledge and wants to combine it with data in order to arrive at some structural (e.g. causal) conclusions,” motivates only the first part of my post (labeled “expediency”). But the enterprise of causal modeling brings another resource to the table. In addition to domain-specific knowledge, it brings a domain-independent “template” that houses that knowledge and which is useful for precisely the “other settings” you are aiming to handle:

“a baby before about 3 years old, before she can gather much formal knowledge of the world … Or think about how a chimp develops an intuitive understanding of his environment which includes cause and effect. Or about an objective to build a robot which could learn about the world without relying on human-specified theories.”

A baby and a chimp exposed to the same stimuli will not develop the same understanding of the world, because the former starts with a richer inborn template that permits it to organize, interpret and encode the stimuli into a more effective representation. This is the role of “compiled representations” mentioned in the second part of my post. (And by “stimuli”, I include “playful manipulations”.)

In other words, the baby’s template has a richer set of blanks to be filled than the chimp’s template, which accounts for Alison Gopnik’s finding of a greater reward-neutral curiosity in the former.

The science of Causal Modeling proposes a concrete embodiment of that universal “template”. The mathematical properties of the template, its inherent limitations and its inferential and algorithmic capabilities should therefore be studied by every machine learning researcher, regardless of whether she obtains it from a domain expert or discovers it on her own from invariant features of the data.

Finding a needle in a haystack is difficult, and it’s close to impossible if you haven’t seen a needle before. Most ML researchers today have not seen a needle — an educational gap that needs to be corrected in order to hasten the discovery of those learning principles you aspire to uncover.

Cheers and thanks for inviting me to participate in your CIFAR workshop on causality.

— Judea

Yoshua Bengio comment Aug. 4, 7:00 am

Agreed. What you call the ‘template’ is something I sort in the machine learning category of ‘inductive biases’ which can be fairly general and allow us to efficiently learn (and here discover representations which build a causal understanding of the world).

— Yoshua